NextVPU Breaks the Performance Records of AI Chips on the Edge Side and Supports "Algorithm Deployment Within 5 Minutes"

SHANGHAI, Nov. 12, 2020 -- Less than five years after its founding, NextVPU has reinforced its leading position in the computer vision industry. Its "blockbuster products" are the N1 series computer vision chips. With high computing power in tera operations per second (TOPS) and powerful real-time data stream processing, N1 series chips support direct lossless deployment of high-precision FP16 neural network models, high-performance INT8 networks, multi-level fine-grained power control, and a highly integrated design that includes a dual-core CPU, ISP, codec, DSP, and rich peripherals. They continue to break the performance records of edge-side AI chips while achieving almost "lossless" precision. In addition, NextVPU has launched Infer Studio™, an easy-to-use AI deployment software platform that supports "5-minute deployment". Infer Studio™ directly addresses a pain point for enterprises: AI algorithms are difficult to deploy.

NextVPU N1 Series Chips

| | N163A | N161A | N160A |
|---|---|---|---|
| CPU | Dual-core A5 @ 1 GHz | Dual-core A5 @ 1 GHz | Dual-core A5 @ 1 GHz |
| Video resolution | 4K @ 30p / 4 x 2M @ 30p | 5M @ 30p / 2 x 2M @ 30p | 4M @ 30p |
| ISP | Yes | Yes | Yes |
| Codec | H.265/JPEG | H.265/JPEG | H.265/JPEG |
| NN core | CVKit NN core | CVKit NN core | CVKit NN core |
| NN data types | FP16 / INT8 / INT4 | FP16 / INT8 / INT4 | INT8 / INT4 / INT2 |
| CNN compute | 2.4 TOPS | 1.2 TOPS | 1.0 TOPS |
| DSP | Dual-core DSP | DSP | DSP |
| Package | BGA 17 x 17 | CSP 14 x 14 | CSP 14 x 14 |

N1 series chip specifications

As every device becomes smart, AI technologies have moved from academic research into practical use and become an engine that helps dozens of industries "improve quality and increase efficiency". Among all kinds of AI technologies, computer vision is undoubtedly a breakthrough point for opening up an intelligent future. From driver assistance systems to IoT devices, and from industry to people's daily lives, computer vision has been widely adopted across sectors.

As a heavyweight in computer vision, NextVPU is building intelligent solutions on both the hardware and software sides, based on its AI chip products and the algorithm deployment platform Infer Studio™. With the help of Infer Studio™, NextVPU's N1 series chips successfully run 11 AI algorithms simultaneously in a consumer product.

How is this achieved? What benefits does an AI solution based on NextVPU's N1 series chips and the Infer Studio™ platform bring to customers? And among the many AI chip players, what product design ingenuity does NextVPU rely on to establish its competitive edge?

Alan Feng, founder and CEO of NextVPU, explained the unique technologies behind NextVPU's computer vision AI chips and the Infer Studio™ platform.

Alan Feng, founder and CEO of NextVPU

1. Computer Vision Solutions Integrating Hardware and Software

According to Alan Feng, NextVPU's R&D team currently has more than 200 staff, with a ratio of hardware engineers to software engineers of about 1:2.2. Based on its self-developed CVKit™ NN IP, NextVPU has developed an AI chip matrix for different market segments, such as cameras, vehicles, and 3D vision, and has launched the AI application development platform Infer Studio™.

Within this chip matrix, NextVPU released three computer vision chips this year (N163, N161, and N160), providing 2.4 TOPS, 1.2 TOPS, and 1 TOPS of computing power, respectively. These chips meet the varied requirements of the machine vision, intelligent camera, ADAS, and AIoT markets. The Infer Studio™ platform helps customers without AI experience quickly deploy AI and vision algorithms.

As Alan Feng explained, NextVPU's N1 series chips are not pure AI co-processors but highly integrated SoCs, which sets them apart from common computer vision chips on the market. This means that N1 series chips not only provide basic computer vision chip functions such as signal sampling and signal processing, but also support many additional functions.

"Our chips adopt a highly heterogeneous architecture. Beyond excellent signal sampling and signal processing performance, they integrate a variety of peripheral interfaces and support many types of memory modules. Overall, N1 series chips are very comprehensive and robust SoCs," he said.

Alan Feng also shared his view that a highly integrated computer vision SoC alone is not enough to meet market demand: to bring AI algorithms into practical applications, the efficiency of hardware support for those algorithms must also improve. He said, "Simply putting what customers want into chips and selling them the chips is not realistic. Vendors actually need a deep understanding of how AI algorithms are applied and extended."

The Infer Studio™ platform improves the efficiency of algorithm deployment by supporting not only neural network operations but also general mathematical methods. In practical applications, this lets it keep pace with the fast iteration of user algorithms.

2. N1 Series Chips: Speed and Accuracy Are the Key Performance Metrics

Every AI application is looking for the ideal AI chip, one that balances performance, power consumption, and cost. AI chips are measured on several dimensions, such as the computing performance obtained per dollar, the performance obtained per kilowatt-hour (kWh) of electricity, the cost of algorithm deployment, and the stability and reliability of the chip itself. Two points are especially crucial: how many frames per second (such as images or video frames) each TOPS claimed by a chip vendor actually delivers, and whether the algorithm's accuracy is maintained when a trained neural network model is converted for deployment.
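The efficiency measures above reduce to simple ratios. The sketch below computes frames per second per claimed TOPS, per watt, per kWh, and per dollar. The `ChipBenchmark` record, the function names, and any sample figures are hypothetical placeholders for illustration, not NextVPU measurements.

```python
from dataclasses import dataclass


@dataclass
class ChipBenchmark:
    """Hypothetical benchmark record for one chip running one network."""
    name: str
    fps: float        # measured frames (images) inferred per second
    tops: float       # vendor-claimed peak compute, in TOPS
    watts: float      # average power draw during the run
    price_usd: float  # unit price of the chip


def fps_per_tops(b: ChipBenchmark) -> float:
    # How many frames per second each claimed TOPS actually delivers.
    return b.fps / b.tops


def fps_per_watt(b: ChipBenchmark) -> float:
    # fps / watts equals frames per joule of energy.
    return b.fps / b.watts


def frames_per_kwh(b: ChipBenchmark) -> float:
    # 1 kWh = 3.6e6 joules, so scale frames per joule accordingly.
    return b.fps / b.watts * 3.6e6


def fps_per_dollar(b: ChipBenchmark) -> float:
    return b.fps / b.price_usd
```

Normalizing by claimed TOPS is what makes chips with different peak ratings comparable: two chips that both reach the same frame rate are not equally efficient if one needs twice the nominal compute to do it.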

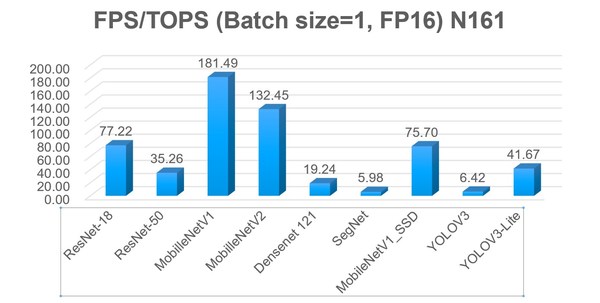

According to NextVPU, test results of the N161 chip on different neural networks show that the number of images it infers per second per TOPS is at an industry-leading level. The N161 chip also performs robustly on FP16-precision networks.

Frames per second achieved by the N161 on each network at FP16 precision

When the N161 runs neural network algorithms, its CPU does not participate in the computation, thanks to the new architecture of the CVKit™ NN IP (CPU usage stays below 1%). The CPU's computing power is therefore left entirely for customers to use.

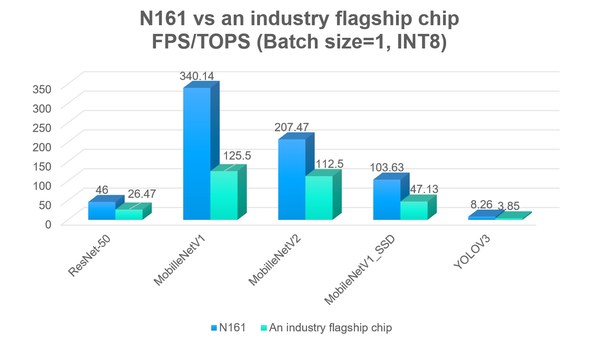

For computing performance, NextVPU benchmarked the N161 chip on 9 neural networks and compared the results with those of an industry flagship chip on 5 of them.

The comparison shows that the N161 chip outperforms the flagship chip on all 5 networks: ResNet-50, MobileNetV1, MobileNetV2, MobileNetV1-SSD, and YOLOv3.

Comparison between the N161 chip and the industry flagship chip on the five neural networks

Besides computing performance, computing precision is also a key indicator of an AI chip, because accuracy lost through data type conversion between training a neural network model and deploying it increases costs.
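To see where that accuracy loss comes from, the sketch below applies a generic symmetric per-tensor INT8 quantization: each FP32 value is mapped to one of 255 integer levels, so every value picks up a rounding error of up to half a step. This scheme is an assumption chosen for illustration; the article does not describe how NextVPU's tools quantize models.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor INT8 quantization (a generic scheme, assumed here)."""
    max_abs = max(abs(v) for v in values)
    # Map the largest magnitude to 127; guard against an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer level and clamp to the symmetric INT8 range.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    # Recover approximate FP32 values from the integer levels.
    return [x * scale for x in q]


weights = [0.42, -1.27, 0.003, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
# Per-value rounding error is bounded by half a quantization step (scale / 2).
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Small values such as `0.003` collapse to zero here, which hints at why production toolchains usually calibrate scales per layer or per channel rather than using a single global one.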

Take unmanned retail machines as an example: if the accuracy of the object (here, goods) recognition algorithm drops by 1% in actual use, the loss rate and operating costs increase significantly.

NextVPU recorded test results for 7 neural networks at FP32 precision on a PC using NCNN and at INT8 precision on the N161 board, and calculated the precision loss when each model was quantized from FP32 to INT8.

On all 7 networks, the precision loss of N161 quantization from FP32 to INT8 is less than 1%. In addition, the N161 chip supports direct deployment of FP16 network models without precision loss.

| Test network | Input size | PC NCNN FP32 top-1 | PC NCNN FP32 top-5 | Board-end INT8 top-1 | Board-end INT8 top-5 |
|---|---|---|---|---|---|
| GoogleNet_V1/Inception v1 | 224x224x3 | 0.661 | 0.884 | 0.653 | 0.886 |
| mobilenetv1 | 224x224x3 | 0.698 | 0.904 | 0.695 | 0.903 |
| mobilenetv2 | 224x224x3 | 0.713 | 0.895 | 0.711 | 0.892 |
| resnet18 | 224x224x3 | 0.664 | 0.889 | 0.670 | 0.886 |
| resnet50 | 224x224x3 | 0.743 | 0.926 | 0.743 | 0.921 |
| SENet-R50 | 224x224x3 | 0.760 | 0.936 | 0.763 | 0.935 |
| squeezenet_v1.1 | 227x227x3 | 0.577 | 0.796 | 0.577 | 0.798 |

Almost no precision loss after INT8 quantization on the N161
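The precision loss per network can be recomputed directly from the figures in the table above. This sketch assumes "precision loss" means the absolute drop in percentage points between the FP32 and INT8 scores, the reading under which every entry stays below 1 point; a negative value means the INT8 model scored slightly higher.

```python
# (network, FP32 top-1, FP32 top-5, INT8 top-1, INT8 top-5), copied from the table above.
RESULTS = [
    ("GoogleNet_V1/Inception v1", 0.661, 0.884, 0.653, 0.886),
    ("mobilenetv1", 0.698, 0.904, 0.695, 0.903),
    ("mobilenetv2", 0.713, 0.895, 0.711, 0.892),
    ("resnet18", 0.664, 0.889, 0.670, 0.886),
    ("resnet50", 0.743, 0.926, 0.743, 0.921),
    ("SENet-R50", 0.760, 0.936, 0.763, 0.935),
    ("squeezenet_v1.1", 0.577, 0.796, 0.577, 0.798),
]


def precision_loss_pp(fp32: float, int8: float) -> float:
    """Accuracy drop in percentage points; negative means INT8 scored higher."""
    return (fp32 - int8) * 100


for name, fp32_top1, fp32_top5, int8_top1, int8_top5 in RESULTS:
    loss1 = precision_loss_pp(fp32_top1, int8_top1)
    loss5 = precision_loss_pp(fp32_top5, int8_top5)
    print(f"{name:28s} top-1 {loss1:+.1f} pp   top-5 {loss5:+.1f} pp")
```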

3. Infer Studio™: Resolving the Pain Points of Algorithm Deployment

The challenge of AI deployment is twofold. On the one hand, the whole industry urgently needs the "right" chips, with excellent performance and cost. On the other hand, deploying AI algorithms in applications is expensive because it requires hard-to-acquire AI expertise and skills. The latter has become a growing problem for AI deployment across the industry.

To solve this problem, NextVPU has launched the AI application development platform Infer Studio™. Infer Studio™ "translates" an algorithm into a file that the chip can "read", so the algorithm can be deployed quickly, giving developers a "one-click" development experience.

Take an ADAS (advanced driver assistance system) project as an example. To monitor whether a driver is talking, making a phone call, or dozing off while driving, the system needs to track the driver's eye state and whether the driver is holding a phone to his or her ear. Deploying such an algorithm into an embedded system generally takes at least one week. With the Infer Studio™ development platform, by contrast, the algorithm can be compiled and deployed to the device quickly.

In this process, developers can bind modules to flexibly combine functional modules according to their own application requirements, or delete, replace, and add algorithm modules as needed. All pipeline building can be done visually or by configuring a few lines of code, significantly reducing the time spent developing AI algorithms.
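The idea of binding, deleting, and replacing modules can be sketched as a small pipeline of named stages. Everything here is hypothetical: the `Pipeline` class, its `add`/`remove`/`replace` methods, and the stand-in stages for the driver-monitoring example are illustrative and are not the Infer Studio™ API. In a real system each stage would run a neural network on the chip.

```python
from typing import Callable

# A stage takes a per-frame context dict and returns it with new keys added.
Stage = Callable[[dict], dict]


class Pipeline:
    """Hypothetical module pipeline; not the Infer Studio(TM) API."""

    def __init__(self) -> None:
        self.stages: list[tuple[str, Stage]] = []

    def add(self, name: str, stage: Stage) -> "Pipeline":
        self.stages.append((name, stage))
        return self

    def remove(self, name: str) -> "Pipeline":
        self.stages = [(n, s) for n, s in self.stages if n != name]
        return self

    def replace(self, name: str, stage: Stage) -> "Pipeline":
        self.stages = [(n, stage if n == name else s) for n, s in self.stages]
        return self

    def run(self, frame: dict) -> dict:
        ctx = dict(frame)
        for _, stage in self.stages:
            ctx = stage(ctx)
        return ctx


# Stand-in stages; the thresholds are arbitrary illustrative values.
def eye_state(ctx: dict) -> dict:
    return {**ctx, "eyes_closed": ctx.get("eye_openness", 1.0) < 0.2}


def phone_check(ctx: dict) -> dict:
    return {**ctx, "phone_at_ear": ctx.get("phone_score", 0.0) > 0.5}


def alert(ctx: dict) -> dict:
    reasons = []
    if ctx.get("eyes_closed"):
        reasons.append("dozing")
    if ctx.get("phone_at_ear"):
        reasons.append("phone")
    return {**ctx, "alerts": reasons}


dms = Pipeline().add("eyes", eye_state).add("phone", phone_check).add("alert", alert)
result = dms.run({"eye_openness": 0.1, "phone_score": 0.9})
```

Keeping stages as named, swappable units is what lets one module (say, a better phone detector) be replaced without touching the rest of the pipeline.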

Beyond vehicle scenarios, the Infer Studio™ development platform can also speed up the path from algorithm compilation to application deployment for scenarios such as object classification, face recognition, vehicle detection, and object segmentation.

What cutting-edge technologies does NextVPU provide to support the "one-click" development experience on Infer Studio™?

The Infer Studio™ development platform supports mainstream frameworks such as TensorFlow, TensorFlow Lite, ONNX, and Caffe, so developers can choose whichever they need. On the software layer, Infer Studio™ provides four function modules: Model Visualization, Compiler, Evaluator, and Debugger.

The Model Visualization module converts the complex code of an algorithm network on the PC side into a network diagram, so developers can analyze the network's structure and attributes intuitively.

The Compiler module converts algorithms developed with mainstream AI frameworks into files that AI chips can read, and compresses them at the same time. Compression further reduces the size of the algorithm model, so it can be deployed on terminal devices with limited storage space, and improves network inference performance.

Compiler also supports optimization options such as operator fusion and precompilation, further enhancing inference performance on AI chips.
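A common instance of operator fusion is folding a BatchNorm layer into the preceding convolution, so the chip executes one operator instead of two. The article does not say which fusions the Compiler performs; this is a generic example, assumed for illustration. With per-channel scale $s = \gamma / \sqrt{\sigma^2 + \epsilon}$, the fused weight is $w' = w s$ and the fused bias is $b' = (b - \mu) s + \beta$.

```python
import math


def fold_batchnorm(w: list[float], b: list[float], gamma: list[float],
                   beta: list[float], mean: list[float], var: list[float],
                   eps: float = 1e-5) -> tuple[list[float], list[float]]:
    """Fold per-channel BatchNorm into the preceding layer's weights and bias.

    For clarity each output channel has a single scalar weight; a real
    convolution would scale every weight in that channel's kernel by the
    same factor.
    """
    new_w, new_b = [], []
    for wc, bc, g, bt, m, v in zip(w, b, gamma, beta, mean, var):
        s = g / math.sqrt(v + eps)   # per-channel BatchNorm scale
        new_w.append(wc * s)         # fused weight
        new_b.append((bc - m) * s + bt)  # fused bias
    return new_w, new_b
```

Because the fused layer produces the same output as conv followed by BatchNorm, the optimization removes a memory round trip at inference time without changing results beyond floating-point rounding.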

The Evaluator module quickly evaluates whether an algorithm runs correctly on the AI chip and whether the chip's computing capability is fully utilized. It can also visually present the network's classification, detection, and segmentation results on test images.
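The top_1 and top_5 figures in the precision table are top-k accuracies: the fraction of test images whose true label is among the model's k highest-scoring classes. The Evaluator's internals are not described, so this is only a minimal sketch of the metric itself, with hypothetical names.

```python
def top_k_accuracy(scores: list[list[float]], labels: list[int], k: int) -> float:
    """Fraction of samples whose true label is among the k highest scores."""
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices sorted by descending score, truncated to the top k.
        top_k = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        if label in top_k:
            hits += 1
    return hits / len(labels)


scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5]]
labels = [1, 1, 0]
```

Running the same metric on FP32 outputs from the PC and INT8 outputs from the board, over the same test images, gives the side-by-side comparison reported in the table.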

The Debugger module efficiently analyzes the deviation and compatibility problems that may arise when porting an algorithm from a PC to an embedded system. Users can export the data of each layer while the algorithm runs and compare it with the corresponding layer of the original algorithm, so errors can be found and debugged at any time.
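Layer-by-layer comparison can be sketched as walking the layers in order and reporting the first one whose on-device output drifts from the PC reference. The export format (a dict of flattened per-layer outputs), the function names, and the choice of cosine similarity with a 0.99 threshold are all assumptions for illustration; the Debugger's actual data format and metrics are not specified.

```python
import math
from typing import Optional


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0 or nb == 0:
        # Two all-zero tensors match; one zero and one non-zero do not.
        return 1.0 if na == nb else 0.0
    return dot / (na * nb)


def first_divergent_layer(reference: dict[str, list[float]],
                          device: dict[str, list[float]],
                          layer_order: list[str],
                          min_cosine: float = 0.99) -> Optional[tuple[str, float, float]]:
    """Return (layer, cosine, max_abs_error) for the first layer below the threshold, or None."""
    for name in layer_order:
        ref, dev = reference[name], device[name]
        cos = cosine_similarity(ref, dev)
        max_err = max(abs(r - d) for r, d in zip(ref, dev))
        if cos < min_cosine:
            return name, cos, max_err
    return None
```

Reporting the first divergent layer matters because errors propagate: once one layer is off, every later layer will also differ from the reference, so the earliest mismatch is usually where the porting problem lies.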

In addition, NextVPU provides technical support tailored to customers' requirements during the implementation of N1 series chips. "We maintain a very close interaction with our customers, and we have integrated many of their requirements into our chips and software tools," Alan Feng said.

4. Summary: Computer Vision Solutions Integrating Hardware and Software

The name NextVPU derives from "Vision Power Unlimited". In the surging wave of artificial intelligence, NextVPU is setting out from computer vision to take on an "empowering" role in smart cameras, machine vision, ADAS, and AIoT.

As a core player in AI chip design, NextVPU not only keeps making breakthroughs in core AI chip technologies, but also provides customers with "one-click" development tools, offering comprehensive product support from hardware to software.

Beyond sharing NextVPU's thinking on product design, Alan Feng pointed out that the computer vision market is still emerging, which means computer vision chip companies like NextVPU have little industry experience to draw on and must explore and move forward on their own. It is expected that, through the exploration of forerunners such as NextVPU, AI vision will be applied more widely and help industries improve quality and efficiency more significantly.